Nearly every company has a test or development environment that is less secure than production, and many have a failed audit to show for it. The relaxed security stems from the belief that developers need a bit of leeway when authority is handed out. When remediating these audit findings, many shops focus on authority but forget about the data. And where does that data come from? Production.

Who exactly has eyes on your PII?

Most shops build their test databases from an extract of production without a second thought. The usual reasoning is that developers need real data and that anything else will produce false results in testing. What these audits are finding is that PII is now exposed to people who may not be authorized to see production data. In these less secure environments, you must also worry about external connections. Does another test system access this data? Is the extract sent to multiple databases? Do you have a team with lower authorization to PII? If the answer to any of these is yes, this is where DOT Anonymizer from ARCAD Software comes in.

Bring your systems out of audit jail

Your first thought may be: I can just do this in-house. I'll have a few programs written and let the magic happen. I hate to be the one to break it to you, but it doesn't work like that. Odds are your shop is short on resources and time. Maybe you don't think it's worth the effort. Experience says that is a bad bet.

Why not let DOT Anonymizer handle the heavy lifting and bring your test and development systems out of audit jail?

With DOT Anonymizer, you can work with multiple databases across multiple operating systems, and even with flat or unstructured files. DOT Anonymizer can be placed into your current ETL (Extract, Transform, Load) process, where it can run on a schedule, be started manually, or be invoked through its CLI from tools like Jenkins.

By protecting this data in test, you can save yourself not only from fines, which can run upwards of $50K USD per violation, but also from the millions that an actual data loss can cost.

Detecting and Masking personal data - irreversibly

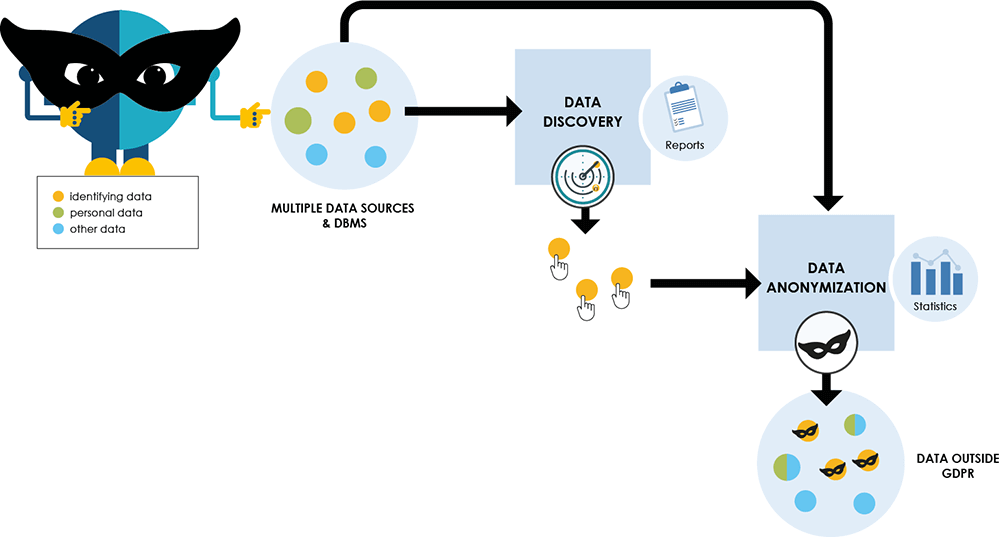

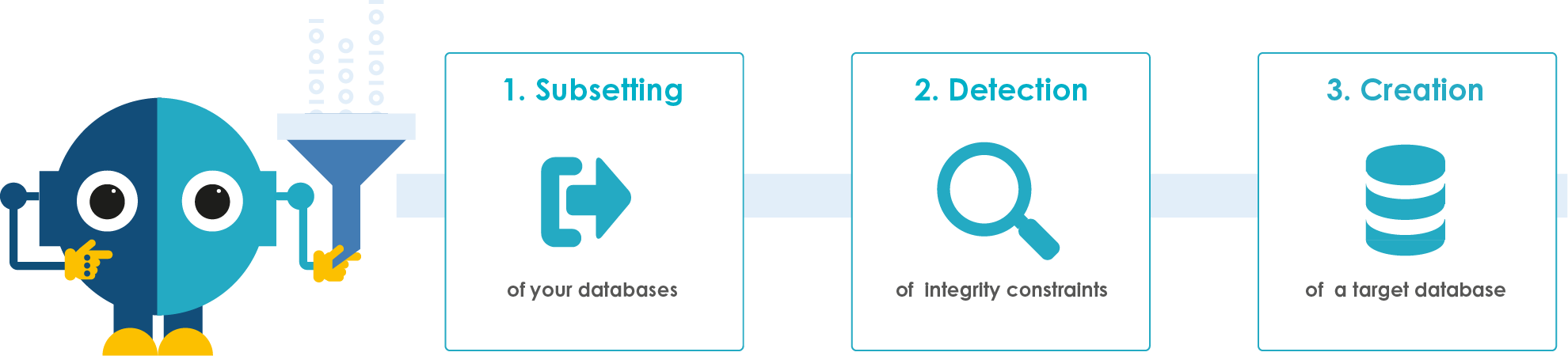

When you look at this data, its sheer scope can be overwhelming. Again, this is where DOT Anonymizer shines: it will detect, mask, and subset your data, or handle whichever of those steps you actually need.

The hard work of identifying the data to review is handled by hundreds of predefined rules, or you can create custom rules with Groovy scripts or regular expressions. These rules find your phone numbers, dates of birth, names, and so on. A rule is then assigned to each identified field to drive the later masking step. The nice part of this process is that you don't have to know all your data from memory: if the process finds a field that looks like PII, it will be flagged. So you don't have to worry about missing that misnamed field that really holds a Social Security Number; DOT Anonymizer will find it for you. As the screenshots show, detection is the first step in the process. And don't forget that detection can run against multiple sources, so your project has a one-stop shop for protecting its data.
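To make content-based detection concrete, here is a minimal Python sketch, assuming a handful of hypothetical regular-expression rules. The names `RULES`, `detect_column`, and `scan_table` are illustrative only; they are not DOT Anonymizer's API or its built-in rules. The sketch classifies a column by what its sampled values look like rather than by its name, which is how a misnamed column holding SSNs still gets caught.

```python
import re

# Hypothetical detection rules (illustration only, not DOT Anonymizer's rule set).
# A bare date pattern cannot tell a birth date from an order date; a real tool
# would combine content rules with context such as column names or neighbors.
RULES = {
    "SSN": re.compile(r"^\d{3}-\d{2}-\d{4}$"),
    "PHONE": re.compile(r"^\(?\d{3}\)?[-. ]?\d{3}[-. ]\d{4}$"),
    "DATE": re.compile(r"^\d{4}-\d{2}-\d{2}$"),
}


def detect_column(values, threshold=0.8):
    """Return the first category whose pattern matches at least `threshold`
    of the non-empty sample values, or None if nothing matches well enough."""
    sample = [v.strip() for v in values if v and v.strip()]
    if not sample:
        return None
    for category, pattern in RULES.items():
        hits = sum(1 for v in sample if pattern.match(v))
        if hits / len(sample) >= threshold:
            return category
    return None


def scan_table(table):
    """Map each column name to a detected category, judging by content, not by name."""
    return {column: detect_column(values) for column, values in table.items()}


customers = {
    "CUSTNO": ["1001", "1002", "1003"],
    "NOTES2": ["123-45-6789", "987-65-4321", "555-12-3456"],  # misnamed column holding SSNs
    "PHONE": ["555-867-5309", "(555) 123-4567", ""],
}
print(scan_table(customers))
# {'CUSTNO': None, 'NOTES2': 'SSN', 'PHONE': 'PHONE'}
```

The threshold lets a column with a few dirty or blank values still be flagged; the detected category would then select the masking rule for that field.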

Handling the homonyms

Caching can be turned on or off, and once you have decided, it's time to make the magic happen. Or is it? Let me make the case for caching. I'm a huge fan of the caching feature in DOT Anonymizer, and seeing it in action is impressive. John Smith or Alan Ashley, appearing in 5 tables spread across 3 databases such as MySQL, DB2 on IBM i, and Oracle, can all be masked consistently. This way Alan Ashley becomes Geri Jones in every table, so when you run the application, the name fits right in and the reports come out correctly. Without caching, Alan Ashley becomes 5 different people, which is not the result most testers are looking for. You can also choose not to cache selected changes. Maybe you don't care if the address or the zip code differs from table to table. You have the freedom to run it however you wish.
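The consistency that caching provides can be illustrated with a small Python sketch. It assumes a hypothetical `NameMasker` class and a tiny replacement pool; it is not how DOT Anonymizer stores its cache, only the general idea: with the cache on, each real value is replaced once and the same substitute is reused everywhere it appears, while with the cache off every occurrence gets a fresh random value.

```python
import random

# Hypothetical replacement pool; a real tool would draw from a much larger dictionary.
FIRST = ["Geri", "Dana", "Morgan", "Lee", "Pat", "Robin"]
LAST = ["Jones", "Rivera", "Chen", "Novak", "Okafor", "Lund"]


class NameMasker:
    """Replace real names with fake ones; with caching on, each real name
    always maps to the same fake name across every table it is applied to."""

    def __init__(self, use_cache=True, seed=None):
        self.use_cache = use_cache
        self.cache = {}  # real name -> fake name
        self.rng = random.Random(seed)

    def _fake(self):
        return f"{self.rng.choice(FIRST)} {self.rng.choice(LAST)}"

    def mask(self, name):
        if not self.use_cache:
            return self._fake()  # a fresh random substitute on every call
        if name not in self.cache:
            self.cache[name] = self._fake()
        return self.cache[name]


# Two "tables" that could live in different databases.
masker = NameMasker(use_cache=True, seed=1)
orders = [{"customer": "Alan Ashley"}, {"customer": "John Smith"}]
invoices = [{"bill_to": "Alan Ashley"}]
for row in orders:
    row["customer"] = masker.mask(row["customer"])
for row in invoices:
    row["bill_to"] = masker.mask(row["bill_to"])
print(orders[0]["customer"] == invoices[0]["bill_to"])  # True
```

Note that the cache itself maps real names to their substitutes, so for the masking to stay irreversible it must be discarded or secured once the run is finished. With a pool this small, two different real names can also land on the same fake name, which is harmless for masking but worth knowing.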

Subsetting test data – with referential integrity

Lastly, or firstly, depending on your ETL process, DOT Anonymizer can help you build your test extract. To me, this is one of the hardest parts of building test data. One would think it's straightforward, but once you get into the depths of the data, it can be rather complex. For example, say you have an application with 5 tables, each related to the others. You have millions of records, but your extract should hold only 1,000. How do you make sure the extract still satisfies the integrity constraints between those tables? Sure, you can write a program that pulls the data by key fields, and so on. But as stated earlier, odds are you are short on time and resources. The extract process detects these relationships so that John Smith and all of John Smith's data come across, while the data stays masked.
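The core of a referentially consistent subset can be sketched in Python with the standard `sqlite3` module. This is a minimal illustration under stated assumptions: a hypothetical three-table schema and a hand-written `RELATIONS` map, whereas the article says DOT Anonymizer detects these relationships itself. The helper names (`fetch_in`, `subset`, `copy_subset`) are illustrative. The sketch picks a small set of root rows, then follows the foreign keys downward so that every order and order line belonging to a kept customer comes along.

```python
import sqlite3

# Hypothetical relationship map: customers -> orders -> order_lines.
# Each tuple is (child_table, child_fk_column, parent_table, parent_key_column),
# listed so that every parent appears before its children.
RELATIONS = [
    ("orders", "cust_id", "customers", "cust_id"),
    ("order_lines", "order_id", "orders", "order_id"),
]


def fetch_in(conn, table, column, values):
    """Fetch rows of `table` whose `column` is in `values`. Table and column names
    come from the trusted RELATIONS map; a production version would batch `values`
    to stay under SQLite's bound-parameter limit."""
    values = list(values)
    if not values:
        return []
    marks = ",".join("?" * len(values))
    return conn.execute(f"SELECT * FROM {table} WHERE {column} IN ({marks})", values).fetchall()


def subset(src, root_table, root_key, limit):
    """Pick `limit` root rows, then follow RELATIONS downward so dependent rows come along."""
    src.row_factory = sqlite3.Row  # rows addressable by column name
    kept = {root_table: src.execute(
        f"SELECT * FROM {root_table} ORDER BY {root_key} LIMIT ?", (limit,)).fetchall()}
    for child, fk, parent, pk in RELATIONS:
        parent_keys = {row[pk] for row in kept.get(parent, [])}
        kept[child] = fetch_in(src, child, fk, parent_keys)
    return kept


def copy_subset(src, dst, kept):
    """Recreate the source tables in `dst` and insert only the kept rows."""
    for row in src.execute(
            "SELECT sql FROM sqlite_master WHERE type = 'table' AND name NOT LIKE 'sqlite_%'"):
        dst.execute(row[0])
    for table, rows in kept.items():
        if rows:
            marks = ",".join("?" * len(rows[0].keys()))
            dst.executemany(f"INSERT INTO {table} VALUES ({marks})", [tuple(r) for r in rows])
    dst.commit()


src = sqlite3.connect(":memory:")
src.executescript("""
CREATE TABLE customers (cust_id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE orders (order_id INTEGER PRIMARY KEY,
                     cust_id INTEGER REFERENCES customers(cust_id));
CREATE TABLE order_lines (line_id INTEGER PRIMARY KEY,
                          order_id INTEGER REFERENCES orders(order_id), sku TEXT);
INSERT INTO customers VALUES (1, 'John Smith'), (2, 'Alan Ashley'), (3, 'Jane Doe');
INSERT INTO orders VALUES (10, 1), (11, 1), (12, 2), (13, 3);
INSERT INTO order_lines VALUES (100, 10, 'A'), (101, 11, 'B'), (102, 12, 'C'), (103, 13, 'D');
""")
dst = sqlite3.connect(":memory:")
copy_subset(src, dst, subset(src, "customers", "cust_id", limit=1))
for t in ("customers", "orders", "order_lines"):
    print(t, dst.execute(f"SELECT COUNT(*) FROM {t}").fetchone()[0])
# customers 1
# orders 2
# order_lines 2
```

A fuller version would also walk upward, pulling in parent rows (for example, a product table) that the kept rows reference, and would apply masking to the extracted rows before or after the copy, depending on where it sits in the ETL flow.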

Another aspect many may not realize: when you have multiple test groups working at once, they will step on each other's data. The extract process can create a separate database for each test group. Remember, all the data constraints are carried over, and the data stays masked.

In a nutshell, you can spend hours and dollars developing your own code and process, or you can drop DOT Anonymizer into your environment and let it do the heavy lifting. That matters today, when security is a top concern yet is often pushed to the back of the queue!

Alan Ashley

Solution Architect, ARCAD Software

Alan has supported and promoted the IBM i platform for over 30 years and serves as Presales Consultant for DevOps on IBM i at ARCAD Software. Before joining ARCAD Software, he spent many years in multiple roles within IBM, from supporting customers with HA and DR to application promotion and migrations of IBM i to the cloud. In those roles, he saw firsthand the pains many have with Application Lifecycle Management, modernization, and data protection. His passion in those areas fits right in with the ARCAD suite of products.